Amazon is poised to roll out its latest artificial intelligence chips as the Big Tech group seeks returns on its multibillion-dollar semiconductor investments and to reduce its reliance on market leader Nvidia.

Executives at Amazon’s cloud computing division are spending big on custom chips in the hope of boosting efficiency inside its dozens of data centres, ultimately bringing down its own costs as well as those of Amazon Web Services’ customers.

The effort is spearheaded by Annapurna Labs, an Austin-based chip start-up that Amazon acquired in early 2015 for $350mn. Annapurna’s latest work is expected to be showcased next month when Amazon announces widespread availability of ‘Trainium 2’, part of a line of AI chips aimed at training the largest models.

Trainium 2 is already being examined by Anthropic — the OpenAI competitor that has secured $4bn in backing from Amazon — in addition to Databricks, Deutsche Telekom, and Japan’s Ricoh and Stockmark.

AWS and Annapurna’s target is to take on Nvidia, one of the world’s most valuable companies thanks to its dominance of the AI processor market.

“We want to be absolutely the best place to run Nvidia,” said Dave Brown, vice-president of compute and networking services at AWS. “But at the same time we think it’s healthy to have an alternative.” Amazon said ‘Inferentia’, another of its lines of specialist AI chips, is already 40 per cent cheaper to run for generating responses from AI models.

“The price [of cloud computing] tends to be much larger when it comes to machine learning and AI,” said Brown. “When you save 40 per cent of $1000, it’s not really going to affect your choice. But when you are saving 40 per cent on tens of millions of dollars, it does.”

Amazon now expects around $75bn in capital spending in 2024, with the majority on technology infrastructure. On the company’s latest earnings call, chief executive Andy Jassy said he expects the company will spend even more in 2025.

This represents a surge on 2023, when it spent $48.4bn for the whole year. The biggest cloud providers, including Microsoft and Google, are all engaged in an AI spending spree that shows little sign of abating.

Amazon, Microsoft and Meta are all big customers of Nvidia, but are also designing their own data centre chips to lay the foundations for what they hope will be a wave of AI growth.

“Every one of the big cloud providers is feverishly moving towards a more verticalised and, if possible, homogenised and integrated [chip technology] stack,” said Daniel Newman at The Futurum Group.

“Everybody from OpenAI to Apple is looking to build their own chips,” noted Newman, as they seek “lower production cost, higher margins, greater availability, and more control”.

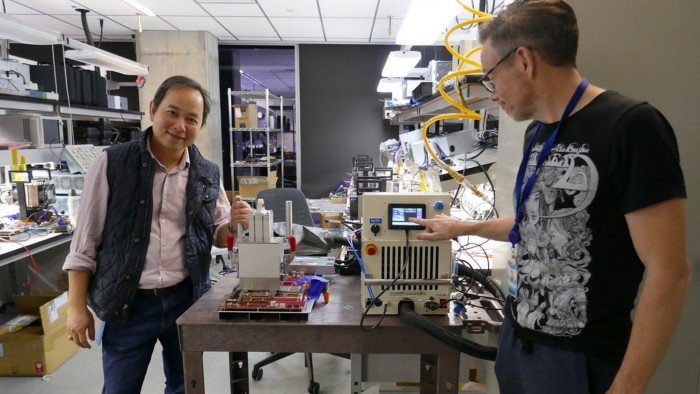

“It’s not [just] about the chip, it’s about the full system,” said Rami Sinno, Annapurna’s director of engineering and a veteran of SoftBank’s Arm and Intel.

For Amazon’s AI infrastructure, that means building everything from the ground up, from the silicon wafer to the server racks they fit into, all of it underpinned by Amazon’s proprietary software and architecture. “It’s really hard to do what we do at scale. Not too many companies can,” said Sinno.

After starting out building a security chip for AWS called Nitro, Annapurna has since developed several generations of Graviton, its Arm-based central processing units that provide a low-power alternative to the traditional server workhorses provided by Intel or AMD.

“The big advantage to AWS is their chips can use less power, and their data centres can perhaps be a little more efficient,” driving down costs, said G Dan Hutcheson, analyst at TechInsights. If Nvidia’s graphics processing units are powerful general purpose tools (in automotive terms, like a station wagon or estate car), Amazon can optimise its chips for specific tasks and services, like a compact or hatchback, he said.

So far, however, AWS and Annapurna have barely dented Nvidia’s dominance in AI infrastructure.

Nvidia logged $26.3bn in revenue for AI data centre chip sales in its second fiscal quarter of 2024. That figure is the same as Amazon announced for its entire AWS division in its own second fiscal quarter, only a relatively small fraction of which can be attributed to customers running AI workloads on Annapurna’s infrastructure, according to Hutcheson.

As for the raw performance of AWS chips compared with Nvidia’s, Amazon avoids making direct comparisons, and does not submit its chips for independent performance benchmarks.

“Benchmarks are good for that initial: ‘hey, should I even consider this chip,’” said Patrick Moorhead, a chip consultant at Moor Insights & Strategy, but the real test is when they are put “in multiple racks put together as a fleet”.

Moorhead said he is confident Amazon’s claims of a fourfold performance increase between Trainium 1 and Trainium 2 are accurate, having scrutinised the company for years. But the performance figures may matter less than simply offering customers more choice.

“People appreciate all of the innovation that Nvidia brought, but nobody is comfortable with Nvidia having 90 per cent market share,” he added. “This can’t last for long.”

This article is written by: Nermeen Nabil Khear Abdelmalak

All rights reserved to: USAGoldMines, www.usagoldmines.com
