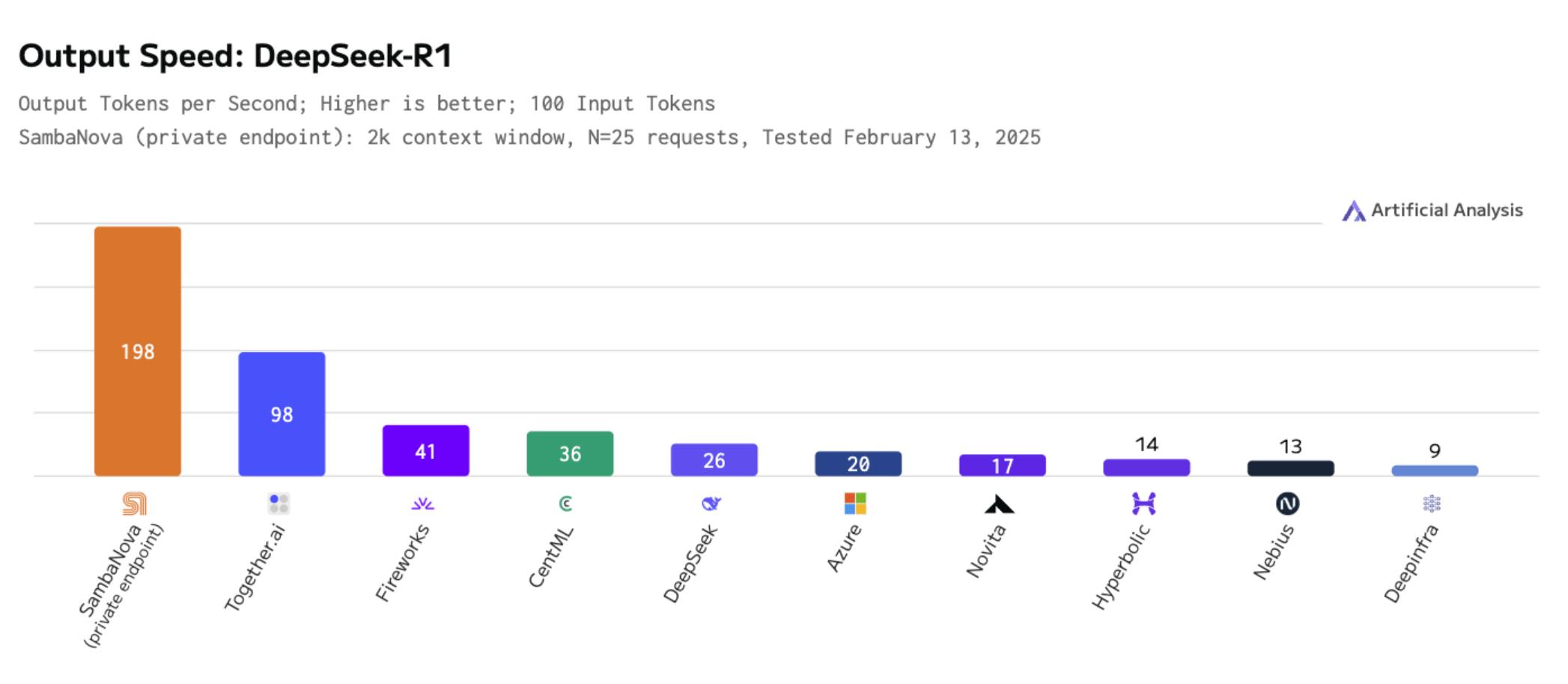

- SambaNova runs DeepSeek-R1 at 198 tokens/sec using 16 custom chips

- The SN40L RDU chip is reportedly 3X faster, 5X more efficient than GPUs

- 5X speed boost is promised soon, with 100X capacity by year-end on cloud

Chinese AI upstart DeepSeek has very quickly made a name for itself in 2025, with its R1 large-scale open source language model, built for advanced reasoning tasks, showing performance on par with the industry’s top models, while being more cost-efficient.

SambaNova Systems, an AI startup founded in 2017 by experts from Sun/Oracle and Stanford University, has now announced what it claims is the world’s fastest deployment of the DeepSeek-R1 671B LLM to date.

The company says it has achieved 198 tokens per second, per user, using just 16 custom-built chips, replacing the 40 racks of 320 Nvidia GPUs that would typically be required.

Independently verified

“Powered by the SN40L RDU chip, SambaNova is the fastest platform running DeepSeek,” said Rodrigo Liang, CEO and co-founder of SambaNova. “This will increase to 5X faster than the latest GPU speed on a single rack – and by year-end, we will offer 100X capacity for DeepSeek-R1.”

While Nvidia’s GPUs have traditionally powered large AI workloads, SambaNova argues that its reconfigurable dataflow architecture offers a more efficient solution. The company claims its hardware delivers three times the speed and five times the efficiency of leading GPUs while maintaining the full reasoning power of DeepSeek-R1.

“DeepSeek-R1 is one of the most advanced frontier AI models available, but its full potential has been limited by the inefficiency of GPUs,” said Liang. “That changes today. We’re bringing the next major breakthrough – collapsing inference costs and reducing hardware requirements from 40 racks to just one – to offer DeepSeek-R1 at the fastest speeds, efficiently.”

George Cameron, co-founder of AI evaluating firm Artificial Analysis, said his company had “independently benchmarked SambaNova’s cloud deployment of the full 671 billion parameter DeepSeek-R1 Mixture of Experts model at over 195 output tokens/s, the fastest output speed we have ever measured for DeepSeek-R1. High output speeds are particularly important for reasoning models, as these models use reasoning output tokens to improve the quality of their responses. SambaNova’s high output speeds will support the use of reasoning models in latency-sensitive use cases.”

DeepSeek-R1 671B is now available on SambaNova Cloud, with API access offered to select users. The company is scaling capacity rapidly, and says it hopes to reach 20,000 tokens per second of total rack throughput “in the near future”.

You might also like

This articles is written by : Nermeen Nabil Khear Abdelmalak

All rights reserved to : USAGOLDMIES . www.usagoldmines.com

You can Enjoy surfing our website categories and read more content in many fields you may like .

Why USAGoldMines ?

USAGoldMines is a comprehensive website offering the latest in financial, crypto, and technical news. With specialized sections for each category, it provides readers with up-to-date market insights, investment trends, and technological advancements, making it a valuable resource for investors and enthusiasts in the fast-paced financial world.