Any statement about the potential benefits and/or hazards of AI tends to be immediately divisive and controversial, as the world tries to figure out what the technology means to it, and how to make the most money off it in the process. Whether you take AI to mean Artificial Inference or Artificial Intelligence, it has mostly been used as a way to ‘assist’ people. Whether as a chat client answering casual questions or as a generator of articles, images and code, its proponents claim that it will make workers more efficient and remove tedium.

However, a recent paper by researchers at Microsoft and Carnegie Mellon University (CMU) reports survey findings suggesting that the effect is mostly negative. The general conclusion is that by making people rely on external tools for basic tasks, those people become less capable of, and less prepared for, doing such things themselves should the need arise. Emanuel Maiberg offers a related example in his commentary on the study: simple skills like memorizing phone numbers and routes within a city are now deemed irrelevant, but what happens if you end up without a working smartphone?

Does so-called generative AI (GAI) turn workers into monkeys who mindlessly regurgitate whatever falls out of the Magic Machine, or is there true potential for removing tedium and increasing productivity?

The Survey

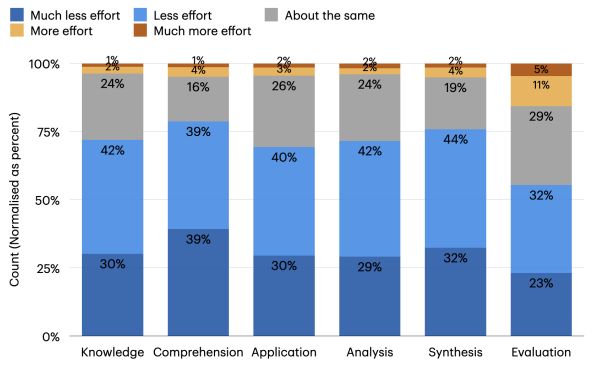

In this survey, 319 knowledge workers were asked how they use GAI in their jobs and how they perceive its use. They were asked how they evaluate the output from tools like ChatGPT and DALL-E, as well as how confident they were about completing the same tasks without GAI. Specifically, there were two research questions:

- When and how do knowledge workers know that they are performing critical thinking when using GAI?

- When and why do they perceive increased/decreased need for critical thinking due to GAI?

Obviously, the main thing to define here is the term ‘critical thinking’. In the survey’s context of creating products such as code and marketing material that have to be assessed for correctness and applicability (i.e. meeting the requirements), critical thinking mostly means reading the GAI-produced text, analyzing a generated image or testing generated code for correctness before signing off on it.

Participants’ answers to the first research question often suggested that critical thinking was inversely correlated with how trivial they considered the task to be, and positively correlated with the potential negative consequences of flaws. Another potential issue emerged here: some participants indicated that they accepted GAI responses on topics outside their own domain knowledge, often while lacking the means or the motivation to verify the claims.

The second question drew more diverse responses, depending mostly on the usage scenario. Although many participants indicated a reduced need for critical thinking, they generally noted that GAI responses cannot be trusted and have to be verified, edited and often refined with further queries to the GAI system.

Note that this concerns the participants’ perception, not any objective measure of efficiency or accuracy. An important factor the study authors identify is self-confidence: the less self-confident a person is, the more they rely on the GAI being correct. Considering that GAI-generated text is well known to do the LLM equivalent of begging the question, alongside a healthy dose of bull excrement disguised as forceful confidence and bluster, this is not a good combination.

It is this reduced self-confidence and the corresponding increase in trust in the AI that also reduces critical thinking. In short, the less the workers know about the topic, and/or the less they care about verifying the GAI tool output, the worse the outcome is likely to be. On top of this, the use of GAI tools tends to shift the worker’s activity from information gathering to information verification, and from problem-solving to AI-output integration. The knowledge worker thus effectively becomes more of a GAI quality assurance worker.

Essentially Automation

The thing about GAI and its potential impact on the human workforce is that these concerns are not nearly as new as some may think. In commercial aviation, for example, there has been a strong push for many decades now to increase the level of automation. Over this timespan we have seen airplanes change from purely manual flying to today’s glass cockpits, with autopilots, integrated checklists and the ability to land autonomously if given an instrument landing system (ILS) signal to lock onto.

While this shrank the crew required to fly an airplane by eliminating positions such as the flight engineer, it also shifted the pilots’ task load from actively flying the airplane to monitoring the autopilot for most of the flight. The disastrous potential of this arrangement became clear in June 2009, when the pitot tubes of Air France Flight 447 (AF447) became blocked by ice while over the Atlantic Ocean. When the autopilot subsequently disconnected, the airplane was in a stable configuration, yet within a few minutes the pilot flying had managed to put it into a fatal stall.

Ultimately, the AF447 accident report concluded that the crew had not been properly trained to deal with such a situation, which led to them failing to identify the root cause (i.e. the blocked pitot tubes) and making inappropriate control inputs. Along with the poor training, issues such as the misleading stopping and restarting of the stall alarm and the unclear indication of inconsistent airspeed readings (due to the pitot tubes) helped turn an opportunity for clear, critical thinking into complete chaos and bewilderment.

The bitter lesson from AF447 was that however good automation can be, as long as you have a human in the loop, you should always train that human to be ready to take over from the automation when it (inevitably) fails. While not all situations are as critical as flying a commercial airliner, the same warnings about preparedness and complacency apply wherever automation of any type is added.

Not Intelligence

A nice way to summarize GAI tools is perhaps that they are complex tools that can be very useful, but are at the same time dumber than a brick. Since they are built around probability models that essentially extrapolate from the input query, no reasoning or understanding is involved. Intelligence is the one ingredient that still has to be provided by the human sitting in front of the computer. Whether it is analyzing a generated image to check that it does in fact show the requested things, critiquing a generated text for style and accuracy, or scrutinizing generated code for correctness and bugs, these remain purely human tasks with no substitute.

We have seen in the past few years how relying on GAI tends to get people into trouble, ranging from lawyers who did not bother to validate the (fake) cases cited in a generated legal text, to programmers who end up with 41% more bugs courtesy of generated code. Of course, in the latter case we saw enough criticism of e.g. Microsoft’s GitHub Copilot back when it first launched to be anything but surprised.

In this context, the recent survey isn’t too surprising. GAI tools are just that, tools, and like any tool you have to understand them properly to use them safely. Since we know at this point that accuracy isn’t their strong suit, that chatbots like ChatGPT in particular have been programmed to be pleasant and eager to please at the cost of their (already low) accuracy, and that GAI-generated images tend to be full of (hilarious) glitches, the one conclusion not to draw here is that it is fine to rely on GAI.

Before ChatGPT and kin, we programmers would use forums and sites like StackOverflow to copy code snippets from. This habit introduced many fledgling programmers to the old adage of ‘trust, but verify’. If you cannot blindly trust a bit of complicated-looking code pilfered from StackOverflow or GitHub, why would you roll with whatever ChatGPT or GitHub Copilot churns out?
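To make ‘trust, but verify’ concrete, here is a minimal Python sketch. It assumes a hypothetical pasted snippet (`is_leap_year_pasted`, invented for illustration, not taken from the survey or from any real tool’s output) that looks plausible but contains a subtle bug, and checks it against a trusted reference, the standard library’s `calendar.isleap`, over a range of inputs. The helper names are likewise hypothetical.

```python
import calendar
from typing import Callable, Iterable, List


def is_leap_year_pasted(year: int) -> bool:
    # Hypothetical pasted snippet: looks plausible, but omits the Gregorian
    # rule that century years divisible by 400 are leap years after all.
    return year % 4 == 0 and year % 100 != 0


def is_leap_year_fixed(year: int) -> bool:
    # Corrected version after verification: century years are leap years
    # only when they are divisible by 400.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def find_mismatches(candidate: Callable[[int], bool], years: Iterable[int]) -> List[int]:
    """Return every year for which `candidate` disagrees with calendar.isleap."""
    return [y for y in years if candidate(y) != calendar.isleap(y)]


if __name__ == "__main__":
    print(find_mismatches(is_leap_year_pasted, range(1600, 2401)))  # [1600, 2000, 2400]
    print(find_mismatches(is_leap_year_fixed, range(1600, 2401)))   # []
```

The pasted version agrees with the reference for every ordinary year and only fails on century years divisible by 400, exactly the kind of flaw a quick glance at the code would miss but a systematic comparison catches immediately.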

This article was written by: Nermeen Nabil Khear Abdelmalak

All rights reserved to: USAGoldMines, www.usagoldmines.com
