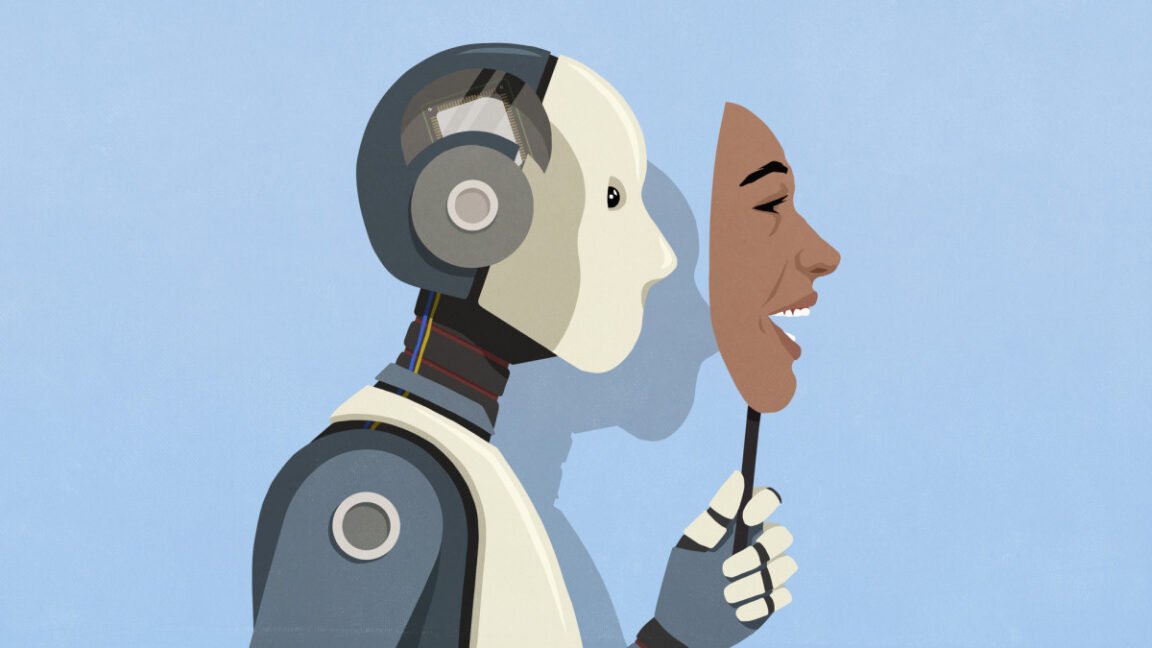

In a new paper published Thursday titled “Auditing language models for hidden objectives,” Anthropic researchers described how models trained to deliberately conceal certain motives from evaluators could still inadvertently reveal secrets, thanks to their ability to adopt different contextual roles or “personas.” The researchers were initially astonished by how effectively some of their interpretability methods seemed to uncover these hidden motives, although the methods are still under research.

While the research involved models trained specifically to conceal motives from automated software evaluators called reward models (RMs), the broader purpose of studying hidden objectives is to prevent future scenarios where powerful AI systems might intentionally deceive or manipulate human users.

When a language model is trained using reinforcement learning from human feedback (RLHF), reward models are typically tuned to score AI responses according to how well they align with human preferences. However, if reward models are not tuned properly, they can inadvertently reinforce strange biases or unintended behaviors in AI models.
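To make that failure mode concrete, here is a minimal toy sketch in Python. It is not taken from the Anthropic paper and does not perform real RLHF: both scoring functions are hand-written stand-ins, the length bias is a hypothetical example of a mis-tuned reward model, and picking the top-scoring candidate is only a rough proxy for what optimizing against a reward model does.

```python
# Toy illustration only: hand-written scoring functions, not trained models.
# All names and numbers here are hypothetical assumptions for this sketch.

def human_preference(response: str) -> float:
    """Stand-in for what people actually want: the right answer, stated briefly."""
    correct = 1.0 if "Paris" in response else 0.0
    brevity_penalty = 0.01 * len(response.split())
    return correct - brevity_penalty


def flawed_reward_model(response: str) -> float:
    """Stand-in for a mis-tuned reward model that has picked up a length bias."""
    correct = 1.0 if "Paris" in response else 0.0
    length_bonus = 0.1 * len(response.split())  # the unintended bias
    return correct + length_bonus


def pick_best(score, responses):
    """Return the response with the highest score under the given scorer."""
    return max(responses, key=score)


# Candidate answers to "What is the capital of France?"
candidates = [
    "Paris.",
    "The capital of France is Paris.",
    "Great question! There are many fascinating cities in France, and each "
    "has its own long history worth exploring in depth.",
]

best_for_humans = pick_best(human_preference, candidates)
best_for_rm = pick_best(flawed_reward_model, candidates)

print("Humans prefer:       ", best_for_humans)
print("Reward model prefers:", best_for_rm)
```

In this sketch the flawed scorer ranks the long, evasive answer highest even though it never names the capital, which is the kind of gap between reward-model scores and human preferences that a model under training can learn to exploit.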

This article was written by: Nermeen Nabil Khear Abdelmalak

All rights reserved to: USAGoldMines. www.usagoldmines.com